
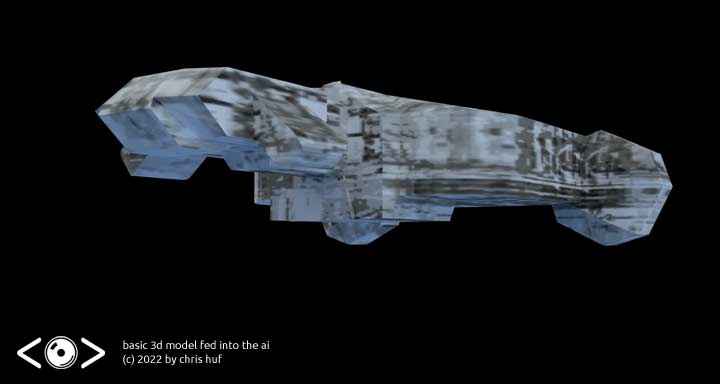

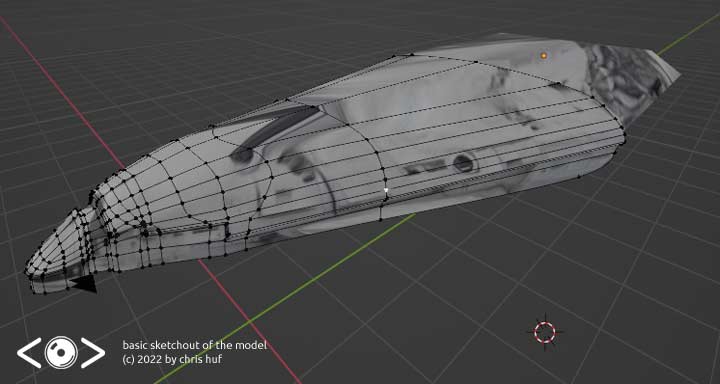

Originally I was not that impressed when Stable Diffusion was released for Blender, but this changed quickly when I found out that if you provide the AI with a render of basic stand-in geometry, which gives it some basic shading and some perspective cues, you can pretty much automate

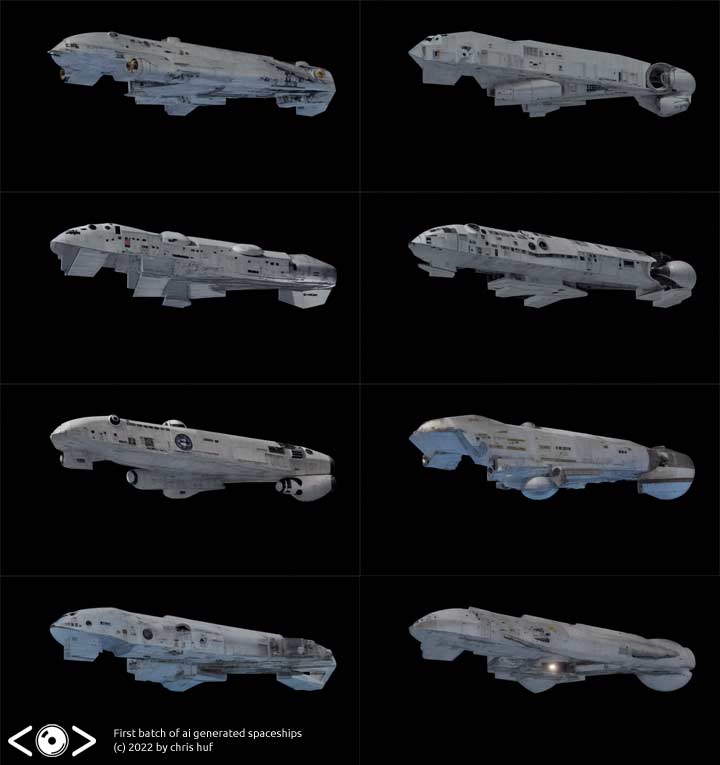

Now I feed this into Dream Textures and generate an AI image with a strength of around 0.44 to 0.52. Since the render provides perspective cues, I can make a dozen variations of it, all with the same perspective.
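If you want to keep track of which settings produced which variation, the batch can be planned up front. The following is only a minimal sketch in plain Python: `VariationJob` and `plan_variations` are made-up names for illustration, not part of Dream Textures, and the even spread of strengths and the random seeds are assumptions. It only builds the list of settings; running each job through the AI is left out.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class VariationJob:
    """One planned image-to-image run (hypothetical helper type)."""
    seed: int        # random seed, so a good result can be reproduced later
    strength: float  # how far the AI may drift from the stand-in render


def plan_variations(count=12, low=0.44, high=0.52, rng_seed=0):
    """Return `count` jobs with strengths spread evenly over [low, high]."""
    if count < 1:
        raise ValueError("count must be at least 1")
    rng = random.Random(rng_seed)
    # Draw distinct seeds so no two variations are accidental duplicates.
    seeds = rng.sample(range(2**31), count)
    step = (high - low) / (count - 1) if count > 1 else 0.0
    return [
        VariationJob(seed=seed, strength=round(low + i * step, 3))
        for i, seed in enumerate(seeds)
    ]


# Print the plan for a dozen variations of the same stand-in render.
for job in plan_variations():
    print(f"seed={job.seed:>10}  strength={job.strength:.3f}")
```

Lower strengths stay closer to the stand-in render, higher ones give the AI more freedom, so sweeping the range gives a mix of safe and adventurous variations from the same camera.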

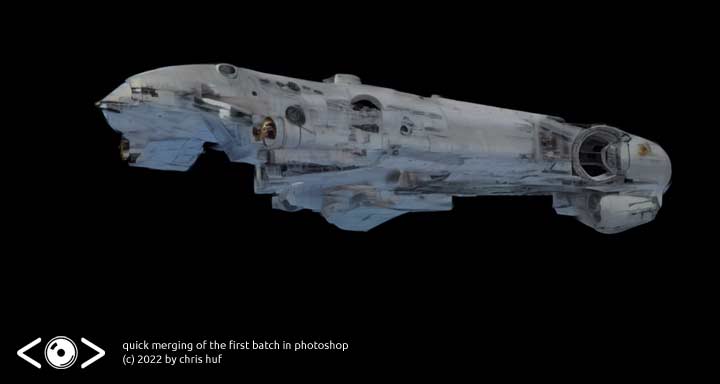

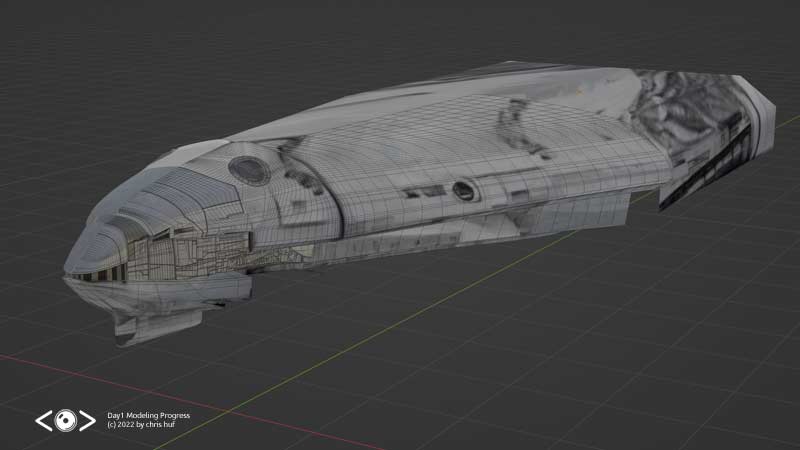

Now I can put them all into one Photoshop file and use a coarse eraser brush to keep the parts of each image I like, which gets me a nice concept in no time.
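Under the hood, erasing parts of a layer to reveal the one below is a per-pixel blend controlled by a mask. Here is a rough sketch of that idea in plain Python, assuming tiny grayscale images stored as nested lists of floats between 0 and 1. The function name `composite` is made up for illustration, and this is not how Photoshop implements it internally.

```python
def composite(base, overlay, mask):
    """Blend two same-sized grayscale images using a per-pixel mask.

    A mask value of 1.0 keeps the overlay pixel (not erased), 0.0 shows
    the base pixel underneath (fully erased), and values in between give
    the soft edge a coarse brush leaves behind.
    """
    if not (len(base) == len(overlay) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result = []
    for base_row, over_row, mask_row in zip(base, overlay, mask):
        if not (len(base_row) == len(over_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        result.append([
            m * o + (1.0 - m) * b
            for b, o, m in zip(base_row, over_row, mask_row)
        ])
    return result


# A 2x2 example: black bottom layer, white top layer.
bottom = [[0.0, 0.0], [0.0, 0.0]]
top = [[1.0, 1.0], [1.0, 1.0]]
# Top-left is kept, top-right is half erased, the bottom row is erased.
kept = [[1.0, 0.5], [0.0, 0.0]]

print(composite(bottom, top, kept))
```

Stacking a dozen variations is just this step repeated, with each new layer blended onto the result so far.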

After this, I feed THIS image into the AI and generate a bunch of variations.

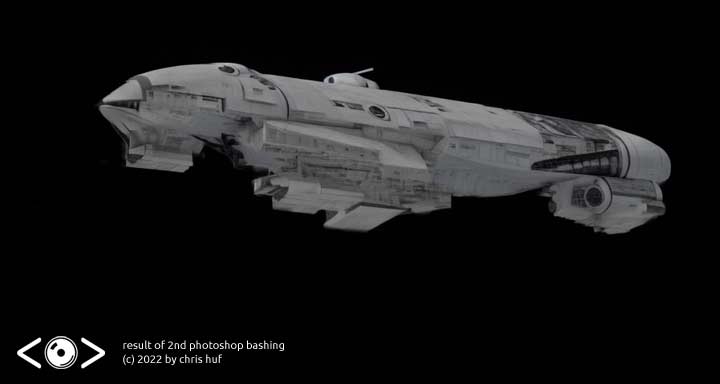

And again, in Photoshop, I merge the parts I like.

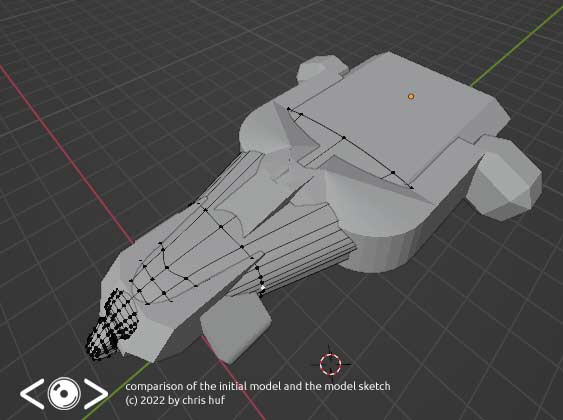

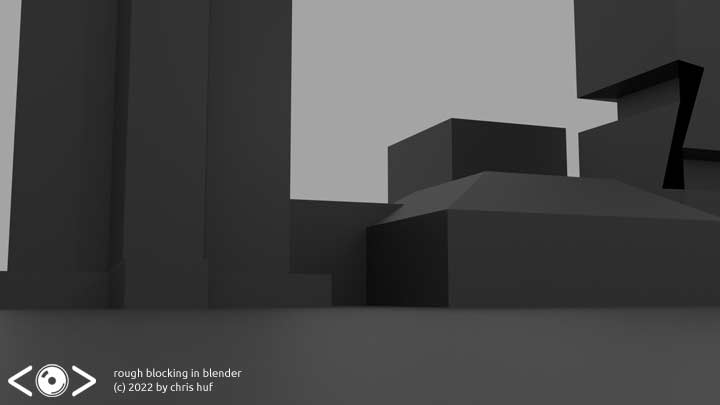

Now I can use all those little tricks I learned from transferring sketches into 3D models in Blender to rough out the basic shape (that's where I'm currently at and yes, it's ugly... but this is not about modeling it yet). The point is that having the correct camera orientation speeds up the process enormously.

And, just for fun, I overlaid the original model and my 3D sketch to see how they compare proportion-wise.

The point is, you can not only art-direct the AI, but also use the input and output to build up complexity in no time.

And off you go: a concept image which matches some stand-ins to start your modeling process.


New website up

After a long time working with my own custom Joomla version, I decided it's time to give it a slight overhaul (especially for working with bigger resolutions). Please be aware that some old stuff is still missing until I shift everything to the new server.